April 11, 2023

Artificial Intelligence: The Use of Computational Models in Healthcare and Its Ethics

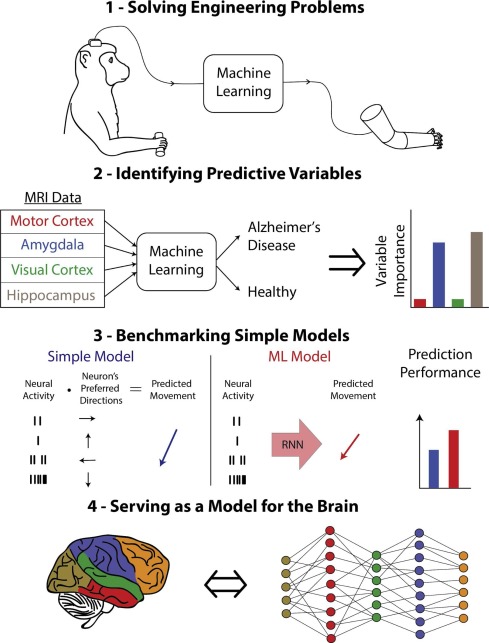

The ever-growing field of artificial intelligence (AI) has led to some of the most revolutionary advances in modern human history. As researchers work to create artificial intelligence, the by-products of their efforts are finding their way into a broad spectrum of applications. One category of this research is AI computational models. While this category is broad, two important types within it are machine learning (ML) and deep learning (DL). ML focuses on using input data and algorithms to imitate the way that humans learn. Over time, the software learns from the input it is given, improving its accuracy. DL, on the other hand, is a type of machine learning that uses artificial neural networks (ANNs) to form multiple layers of processing, which extract progressively higher-level features from data. ANNs are intricate computing systems designed to simulate the natural neural networks of the human brain. This developing technology has allowed computers to outperform humans in image classification and some board games, and it has enabled speech-to-text on most modern cell phones. [1] But there are uses for this technology beyond losing chess to a computer. With the wide variety of computational models being developed, uses for AI are expanding into fields beyond the technology sector. With this technology advancing, and with little prospect of holding back this wave of knowledge, it is worth examining how it fits into the field of neuroscience.

The intersection of math, technology, and neuroscience, or “cognitive computational” neuroscience [2], is expanding. With the dawning of new technology, professionals in neuroscience are developing medical decision-support systems using these computational models.

These systems generally use one of three types of learning: supervised, unsupervised, or reinforcement learning. Supervised learning forms a model that predicts outputs from input data. Unsupervised learning focuses on finding structure in data, for example through clustering, dimensionality reduction, and compression. Reinforcement learning allows a system to learn the best actions based on the outcome at the end of a sequence of actions. The pros and cons of each of these types are still being examined; however, research has shown that there is a use for them in neurology. [3] One of the issues faced by the neuroscience community is the difficulty of extracting qualitative information from measurements of neural activity. With the help of machine learning tools, neurologists might be able to make sense of large data sets of neural code and improve the accuracy of their medical applications. This in itself is a difficult feat, as the neural code is not fully understood. [1]
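To make the difference between supervised and unsupervised learning concrete, here is a minimal sketch in plain Python. It is an illustration only, not a method taken from the cited papers: the firing-rate numbers, the labels "rest" and "active", and the function names `fit_centroids`, `predict`, and `two_means` are all hypothetical. The supervised part uses known labels to learn one centroid per class; the unsupervised part is handed the same numbers without labels and has to find the two groups on its own. Reinforcement learning is left out to keep the example short.

```python
from statistics import mean

# Hypothetical firing rates (spikes per second) and labels, made up for illustration.
rates = [2.0, 2.5, 3.0, 8.0, 8.5, 9.0]
labels = ["rest", "rest", "rest", "active", "active", "active"]


def fit_centroids(samples, sample_labels):
    """Supervised: use the known labels to compute one centroid per class."""
    return {
        label: mean(x for x, l in zip(samples, sample_labels) if l == label)
        for label in set(sample_labels)
    }


def predict(centroids, x):
    """Assign a new measurement to the class with the nearest centroid."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))


def two_means(samples, iterations=10):
    """Unsupervised: find two clusters without labels (1-D k-means, k = 2).

    Assumes the samples contain at least two distinct values.
    """
    low, high = min(samples), max(samples)
    for _ in range(iterations):
        near_low = [x for x in samples if abs(x - low) <= abs(x - high)]
        near_high = [x for x in samples if abs(x - low) > abs(x - high)]
        low, high = mean(near_low), mean(near_high)
    return low, high


centroids = fit_centroids(rates, labels)
prediction = predict(centroids, 7.0)   # classify a new, unlabeled measurement
clusters = two_means(rates)            # group the same data with no labels at all
```

In this toy data set both approaches recover the same two groups, but only the supervised model can attach a name to them, because only it was given labels.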

Much of the research being done in neurology involves imaging techniques, and within this realm machine learning has become a crucial factor. One example is the use of deep convolutional neural networks (dCNNs) to predict cardiovascular risk factors from retinal fundus images alone. [1] Cardiovascular diseases (CVDs) are one of the leading causes of premature death, which makes the early identification of causal diseases a key step in prevention. Fundus retinal imaging (FRI) is a non-invasive procedure that captures details of the rear inner surface of the eye with a specialized camera. In some instances, this can be done via an at-home kit. FRI’s common use has created a large body of usable data. However, processing this data is a challenging task in healthcare, requiring time and training beyond our current capacity. This is where automated systems come into play. ML models can take the information presented in these images and convert it into quantifiable input. These computational models analyze vast data sets at a speed and efficiency not possible for humans, expediting the identification of risk factors. [10] This ability to find potentially lifesaving information via non-invasive methods is just the start of what ML computational models could do. With the use of these new systems, technology could increase the rate of early diagnoses, giving patients a better chance for successful treatment. [4]

Twenty-five percent of adults, eighteen percent of adolescents, and thirteen percent of children in the U.S. have a mental disorder. [6] Many neurological, laryngeal, and mental disorders present symptoms associated with language impairment. Language impairment of this kind can be divided into three categories: fluency, voice, and articulation. Fluency disorders involve the unusual repetition of rhythms or sounds, voice disorders involve an abnormal tone, and articulation disorders involve the distortion of certain sounds. Some researchers in neuroscience are examining how these speech abnormalities might be used to develop parameters for computational models tasked with assisting diagnosis. With disorders such as autism, Alzheimer’s, and depression, a patient’s speech patterns typically differ significantly from those of an individual without these disorders. [6] For instance, depressive speech may sound monotonous, uninteresting, or lacking in energy. In contrast, a patient suffering from Alzheimer’s may choose incorrect words. Because depression changes how speech sounds, ML models developed to analyze the acoustics of a patient’s speech may be able to detect the disorder. Using DL systems for Alzheimer’s, while not impossible, is more nuanced. One of the drawbacks researchers face when implementing these AI computational systems is the divide between the expected trends gathered from input data and the complexities of living things. A clear example of this divide can be seen in the differences between cultural speech patterns. For deep learning programs to function over a wide population group, there must be ample data from different regions with different speech patterns. A person in Texas does not speak the same way someone from Wisconsin might. Without accounting for this gap, an error is more likely to occur in the diagnosis of a disease like Alzheimer’s. [6]

Another use for ML models can be found in work on epilepsy. Epilepsy is one of the most common neurological disorders in the world. It is also relatively difficult to treat, and is often described as drug-resistant or refractory. While most people with epilepsy can live full, normal lives, the disorder is associated with a trend of early death. One common treatment technique for epilepsy uses deep brain stimulation. This stimulation, which is continuous and does not optimize its parameters, is known to risk many adverse side effects. However, because epilepsy is recurrent and tends to follow patterns based on previous episodes, it offers a base from which to develop ML computational models. New research describes technology that can be used to detect these seizures as well as control them, although not stop them completely. While the results so far are varied, there is ample indication that with time and further research, seizures might become easier to track and manage. [4] Even so, this is a great leap forward in neurology.

Machine learning and deep learning, while impressive, are not quite on par with the AI of the movies. There are still many conditions that must be met for them to work as intended. One of the first things to consider is whether the data being examined matches the type of ML method being used for analysis. Much as in regular data analysis, you can’t fit a quadratic regression to data that follows a cubic equation; if you did, you would get utter nonsense. This means that users of these computational models must select their method intelligently or else preprocess their data. However, there are workarounds, one of which is an automated ML method. An automated ML method goes through as many computational models as possible and selects the best-performing option. It does, however, take a considerable amount of time to run and as such is not suitable for real-time feedback. Although it is capable of finding a proper method on its own, it is still beneficial for an ML developer to be fluent in the type of data being examined. [1]
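The idea of trying several models and keeping the best performer can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not how any particular automated ML tool works: the candidate "models" are just polynomials of degree 1, 2, and 3, the data is a made-up noiseless cubic, and the function names `fit_polynomial`, `validation_error`, and `select_best_degree` are hypothetical. It also shows the mismatch described above: a quadratic fitted to cubic data performs poorly on held-out points.

```python
def fit_polynomial(xs, ys, degree):
    """Least-squares polynomial fit via the normal equations (A^T A) c = A^T y."""
    n = degree + 1
    # Augmented matrix: row i holds the sums of x^(i+j), then the sum of y * x^i.
    aug = [
        [sum(x ** (i + j) for x in xs) for j in range(n)]
        + [sum(y * x ** i for x, y in zip(xs, ys))]
        for i in range(n)
    ]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(col + 1, n):
            factor = aug[row][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[row][k] -= factor * aug[col][k]
    # Back substitution; coeffs[k] multiplies x**k.
    coeffs = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(aug[i][j] * coeffs[j] for j in range(i + 1, n))
        coeffs[i] = (aug[i][n] - tail) / aug[i][i]
    return coeffs


def validation_error(coeffs, xs, ys):
    """Mean squared error of the fitted polynomial on held-out points."""
    predictions = [sum(c * x ** k for k, c in enumerate(coeffs)) for x in xs]
    return sum((p - y) ** 2 for p, y in zip(predictions, ys)) / len(xs)


def select_best_degree(train, held_out, degrees=(1, 2, 3)):
    """Fit every candidate model and keep the one with the lowest held-out error."""
    errors = {}
    for degree in degrees:
        coeffs = fit_polynomial(train[0], train[1], degree)
        errors[degree] = validation_error(coeffs, held_out[0], held_out[1])
    return min(errors, key=errors.get), errors


def cubic(x):
    """Made-up ground truth for the illustration."""
    return x ** 3 - 2 * x


train_x = [i * 0.5 for i in range(-6, 7)]        # -3.0 to 3.0
val_x = [i * 0.5 + 0.25 for i in range(-6, 6)]   # points between the training points
train = (train_x, [cubic(x) for x in train_x])
held_out = (val_x, [cubic(x) for x in val_x])

best_degree, errors = select_best_degree(train, held_out)
```

Even in this tiny example every candidate has to be fitted and scored before one can be chosen, which hints at why automated methods that search many real models are slow.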

DL systems come with their own brand of complexity. Because they are created to resemble the functionality and structure of the human brain, there are many hidden layers within a DL computational model. These extra layers are a vital part of the method. The additional complexity they bring allows for the fine-tuning of parameters and conditions, thus minimizing the summed differences between predicted outcomes and actual outcomes. Researchers are currently examining ways to optimize this method. One of these is the use of biological neuromodulation, best defined as a mechanism by which a neural network reconfigures itself, its hyperparameters, and its connectivity based on its environment and behavioral state. Although it is not completely understood yet, there is room for advancement within this field of research. [7]
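The way a hidden layer's parameters are tuned to shrink the gap between predicted and actual outcomes can be sketched with a very small network in plain Python. This is a simplified illustration, not the architecture from the cited study: it assumes a single hidden layer of four tanh units, a mean squared error loss, plain gradient descent, and a made-up task of learning y = x² from five points. The function name `train_tiny_network` and all of its parameters are hypothetical.

```python
import math
import random


def train_tiny_network(xs, ys, hidden=4, epochs=500, lr=0.05, seed=0):
    """Train a 1-input, 1-output network with one hidden tanh layer.

    Returns the mean squared error recorded at the start of every epoch.
    """
    rng = random.Random(seed)
    w1 = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]  # input -> hidden weights
    b1 = [0.0] * hidden                                   # hidden biases
    w2 = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]  # hidden -> output weights
    b2 = 0.0                                              # output bias
    n = len(xs)
    losses = []
    for _ in range(epochs):
        gw1, gb1, gw2 = [0.0] * hidden, [0.0] * hidden, [0.0] * hidden
        gb2, loss = 0.0, 0.0
        for x, y in zip(xs, ys):
            # Forward pass: hidden activations, then the prediction.
            h = [math.tanh(w1[j] * x + b1[j]) for j in range(hidden)]
            y_hat = sum(w2[j] * h[j] for j in range(hidden)) + b2
            err = y_hat - y
            loss += err * err / n
            # Backward pass: accumulate gradients of the mean squared error.
            d = 2 * err / n
            for j in range(hidden):
                gw2[j] += d * h[j]
                dh = d * w2[j] * (1 - h[j] ** 2)  # derivative of tanh is 1 - tanh^2
                gw1[j] += dh * x
                gb1[j] += dh
            gb2 += d
        losses.append(loss)
        # Gradient descent step: nudge every parameter against its gradient.
        for j in range(hidden):
            w1[j] -= lr * gw1[j]
            b1[j] -= lr * gb1[j]
            w2[j] -= lr * gw2[j]
        b2 -= lr * gb2
    return losses


xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
ys = [x * x for x in xs]
losses = train_tiny_network(xs, ys)
# losses[0] is the error before any training; losses[-1] is the error near the end.
```

Real DL systems stack many such layers and rely on specialized libraries, but the loop is the same in spirit: predict, measure the error, and adjust the parameters to reduce it.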

It is important that when we look at the research being done in this field, we acknowledge that it is relatively new and very much still in development. Of the papers examined for this article, all were either published in the last five years or referenced studies from the last five years. Despite the newness of this technology, its appeal is clear: ANNs provide an innovative approach not just to tackling the complexities of our brains, but also to data analysis. By allowing for the formation of artificial models of complex behaviors, heterogeneous neural activity, and neural connectivity, ML and ANNs are revolutionizing the field of neuroscience. It is with these improvements that computational models are becoming an integral part of modern neuroscience research. As technology advances, so does our ability to apply these advances to the field of neuroscience. From moving up the timeline of early diagnoses to examining large neuronal data sets for patterns that are difficult for a human to find and understand, these advances are why I believe researching the different uses of this technology in the field is worthwhile.

[1] J. I. Glaser, A. S. Benjamin, R. Farhoodi, and K. P. Kording, “The roles of supervised machine learning in systems neuroscience,” Prog. Neurobiol., vol. 175, pp. 126–137, Apr. 2019, doi: 10.1016/j.pneurobio.2019.01.008.

[2] W. Messner, “Improving the cross-cultural functioning of deep artificial neural networks through machine enculturation,” Int. J. Inf. Manag. Data Insights, vol. 2, no. 2, p. 100118, Nov. 2022, doi: 10.1016/j.jjimei.2022.100118.

[3] D. Nguyen et al., “Ensemble learning using traditional machine learning and deep neural network for diagnosis of Alzheimer’s disease,” IBRO Neurosci. Rep., vol. 13, pp. 255–263, Dec. 2022, doi: 10.1016/j.ibneur.2022.08.010.

[4] S. Nambi Narayanan and S. Subbian, “HH Model Based Smart Deep Brain Stimulator to Detect, Predict and Control Epilepsy Using Machine Learning Algorithm,” J. Neurosci. Methods, p. 109825, Feb. 2023, doi: 10.1016/j.jneumeth.2023.109825.

[5] T. T. Rogers, “Neural networks as a critical level of description for cognitive neuroscience,” Underst. Mem. Which Level Anal., vol. 32, pp. 167–173, Apr. 2020, doi: 10.1016/j.cobeha.2020.02.009.

[6] M. Sayadi, V. Varadarajan, M. Langarizadeh, G. Bayazian, and F. Torabinezhad, “A Systematic Review on Machine Learning Techniques for Early Detection of Mental, Neurological and Laryngeal Disorders Using Patient’s Speech,” Electronics, vol. 11, no. 24, p. 4235, 2022, doi: 10.3390/electronics11244235.

[7] J. Mei, R. Meshkinnejad, and Y. Mohsenzadeh, “Effects of neuromodulation-inspired mechanisms on the performance of deep neural networks in a spatial learning task,” iScience, vol. 26, no. 2, p. 106026, Feb. 2023, doi: 10.1016/j.isci.2023.106026.

[8] “The human brain inside a glass jar.” https://pixexid.com/image/the-human-brain-inside-a-glass-k1kxa9pn (accessed Mar. 12, 2023).

[9] “File:AI-ML-DL.svg – Wikipedia,” May 12, 2020. https://commons.wikimedia.org/wiki/File:AI-ML-DL.svg (accessed Mar. 30, 2023).

[10] R. G. Barriada and D. Masip, “An Overview of Deep-Learning-Based Methods for Cardiovascular Risk Assessment with Retinal Images,” Diagnostics, vol. 13, no. 1, Art. no. 1, Jan. 2023, doi: 10.3390/diagnostics13010068.
